Azure App Services - When do you plan for scaling?

Azure App Services are one of my favorite services in Microsoft Azure. They are multipurpose, can run almost anything and give you endless options, yet the management required to keep your app running is minimal.

When you make your move to Azure and start enjoying all that the App Services platform has to offer, there is always that one question about availability. Azure lets you easily scale your apps either horizontally or vertically, so you can go pretty much any direction you want performance-wise.

Vertical vs Horizontal Scaling

When you scale, you basically have two options:

- Horizontal scaling (scaling out or in): add or remove instances (copies) of your solution, so multiple instances share the load. Traffic is load balanced across the instances, which requires a stateless design. Your solution needs to support this, or you end up with unexpected behavior;

- Vertical scaling (scaling up or down): add more power (generally memory, CPU and disk) to your instance. Think of it as upgrading your machine.
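The stateless requirement is easiest to see in a toy model. The Python sketch below is an illustration only, not how Azure's front end works: the `Instance` class, the `simulate` function and the round-robin policy are hypothetical. Each instance keeps its own in-memory session store, so a user whose requests alternate between instances sees a different cart on each one. Pointing every instance at one shared store (standing in for an external cache or database) removes the problem.

```python
from itertools import cycle


class Instance:
    """Hypothetical app instance with a per-instance session store."""

    def __init__(self, name, shared_store=None):
        self.name = name
        # Use the shared store if one is given, otherwise a private in-memory dict.
        self.sessions = shared_store if shared_store is not None else {}

    def handle(self, session_id, item=None):
        """Add an item to the session's cart (if given) and return a copy of the cart."""
        cart = self.sessions.setdefault(session_id, [])
        if item is not None:
            cart.append(item)
        return list(cart)


def simulate(instances):
    """Send three requests for one user through a round-robin balancer."""
    balancer = cycle(instances)
    next(balancer).handle("user-1", "book")  # lands on instance A
    next(balancer).handle("user-1", "pen")   # lands on instance B
    return next(balancer).handle("user-1")   # back on instance A


# In-memory state per instance: the cart is split across instances.
print(simulate([Instance("A"), Instance("B")]))  # ['book']

# Shared state: both instances see the same cart.
shared = {}
print(simulate([Instance("A", shared), Instance("B", shared)]))  # ['book', 'pen']
```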

Long story short

When you scale up or down, the scaling action itself completes within seconds. But, as you might know, web applications take some time to start (warm up) before they can serve requests. During a vertical scaling action, traffic is routed to a new instance almost instantly, but the app still needs to warm up, and how long that takes depends on how you built your app. Once warm-up is complete, the app functions as before (provided you scaled to the right size). This is not the case when you scale horizontally: your app remains available instantly, probably before you can refresh your page.

So do you actually need to plan your scaling? The answer is "it depends". Technically we're not talking about downtime here: we still get HTTP 200s and the application is served correctly; the initial load just takes a few seconds longer after scaling up or down. Whether scaling up and down affects your users' experience depends entirely on how you built your application. For instance, if a lot of processing and logic is executed client side (in the browser), chances are the impact is minimal. If you pick the right time for your scaling action, the impact is also minimal. But if you require non-stop, instant, highly available connectivity, look into horizontal scaling (scaling in and scaling out). Make sure your app is suited to the platform you choose. Simply put: design for the platform you want to use and the behavior you want to see.

So when do you plan for scaling? Always. Either when designing and building your solution or when you deploy an existing solution to Azure. Take into consideration the different capabilities Azure App Services have to offer, educate yourself on the behavior of these wonderful services and plan ahead. Resource planning is not to be taken lightly as this impacts both user experience and the financial picture.

Take a look at the extensive Azure App Service documentation (https://docs.microsoft.com/en-us/azure/app-service) and best practices (https://docs.microsoft.com/en-us/azure/app-service/app-service-best-practices) to build your app the right way and avoid unexpected behavior.

What's happening when you scale up or down?

So what is the impact when you scale, what happens in the background, and when do you need to plan for this tech-magic? Always, but it depends on a lot of variables: some you control (architecture), some you need telemetry to understand (usage of your solution), and some are set by the capabilities of the platform.

Let's take a deeper look at how response times are actually affected during scaling and what happens in the background. We'll look at both scaling up and scaling out; the two techniques result in different behavior (and have their own use cases). To clarify: we're talking about the initial loading after the scaling action. Once the process is done, load times are perfectly normal.

Test Setup

To make a fair comparison, I deployed two WebApps running on Azure App Services, one running Windows and one running Linux, both hosting a default ASP.NET Core 2.1 application. Additionally, I deployed a single virtual machine to run the tests from, to eliminate any variables caused by my (sometimes) inconsistent internet connection. To be fair, you can throw many more variables into the mix and make this as extensive as you want, but the test setup described below shows how scaling might impact availability if you don't plan ahead.

My setup:

WebApp #1

- App Service Plan Tier: S1

- Instance count: 1

- OS: Linux

- Region: West Europe

- Always On Enabled

WebApp #2

- App Service Plan Tier: S1

- Instance count: 1

- OS: Windows

- Region: West Europe

- Always On Enabled

Virtual Machine

- Virtual Machine size: Standard_A1

- OS: Linux (Ubuntu 18.04 LTS)

- Region: West Europe

For the actual test I'm running curl with a format file that outputs the information we need. The format file looks like this:

```text
wesley@vm-appsvctest:~$ cat curlfile
\n
http_code: %{http_code}\n
time_namelookup: %{time_namelookup}\n
time_connect: %{time_connect}\n
time_appconnect: %{time_appconnect}\n
time_pretransfer: %{time_pretransfer}\n
time_redirect: %{time_redirect}\n
time_starttransfer: %{time_starttransfer}\n
----------\n
time_total: %{time_total}\n
\n
wesley@vm-appsvctest:~$
```
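If you want to analyse a run instead of eyeballing it, the output this format file produces is easy to parse. A minimal Python sketch, assuming the key names exactly as they appear in the curlfile above (the `parse_curl_output` name is hypothetical):

```python
def parse_curl_output(text):
    """Parse the output of `curl -w "@curlfile"` into one dict per request.

    Assumes the key names from the curlfile: each sample starts with
    http_code, and the dashed separator and blank lines carry no data.
    """
    samples = []
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if not sep:
            continue  # blank line or the "----------" separator
        key, value = key.strip(), value.strip()
        if key == "http_code":
            samples.append({"http_code": int(value)})  # a new request begins
        elif samples:
            samples[-1][key] = float(value)  # timings are in seconds
    return samples
```

For example, redirect the polling loop's output to a file with `>> timings.txt` and pass the file's contents to `parse_curl_output`.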

Before, during and after the scaling operation we run curl with the required parameters as such:

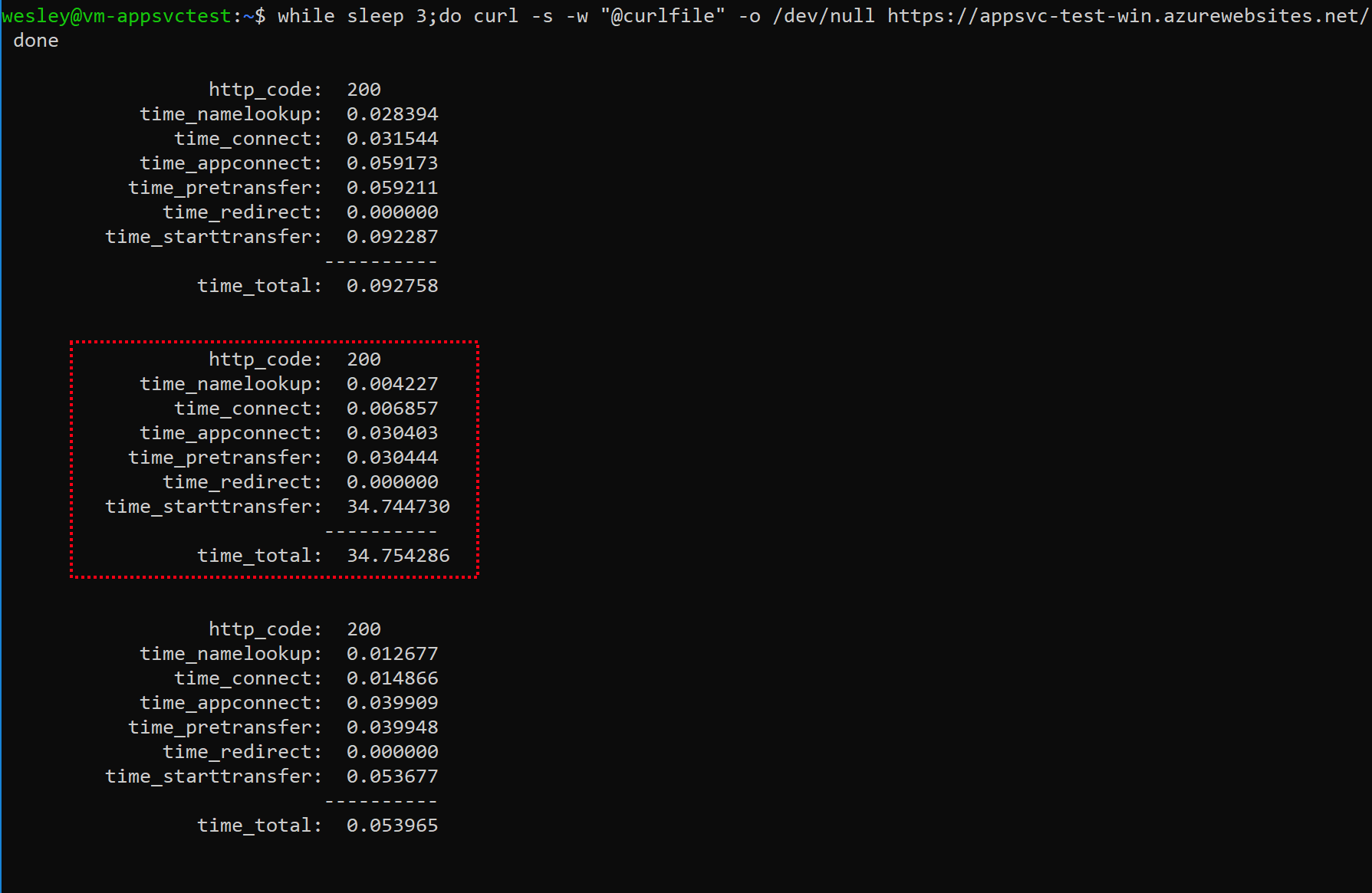

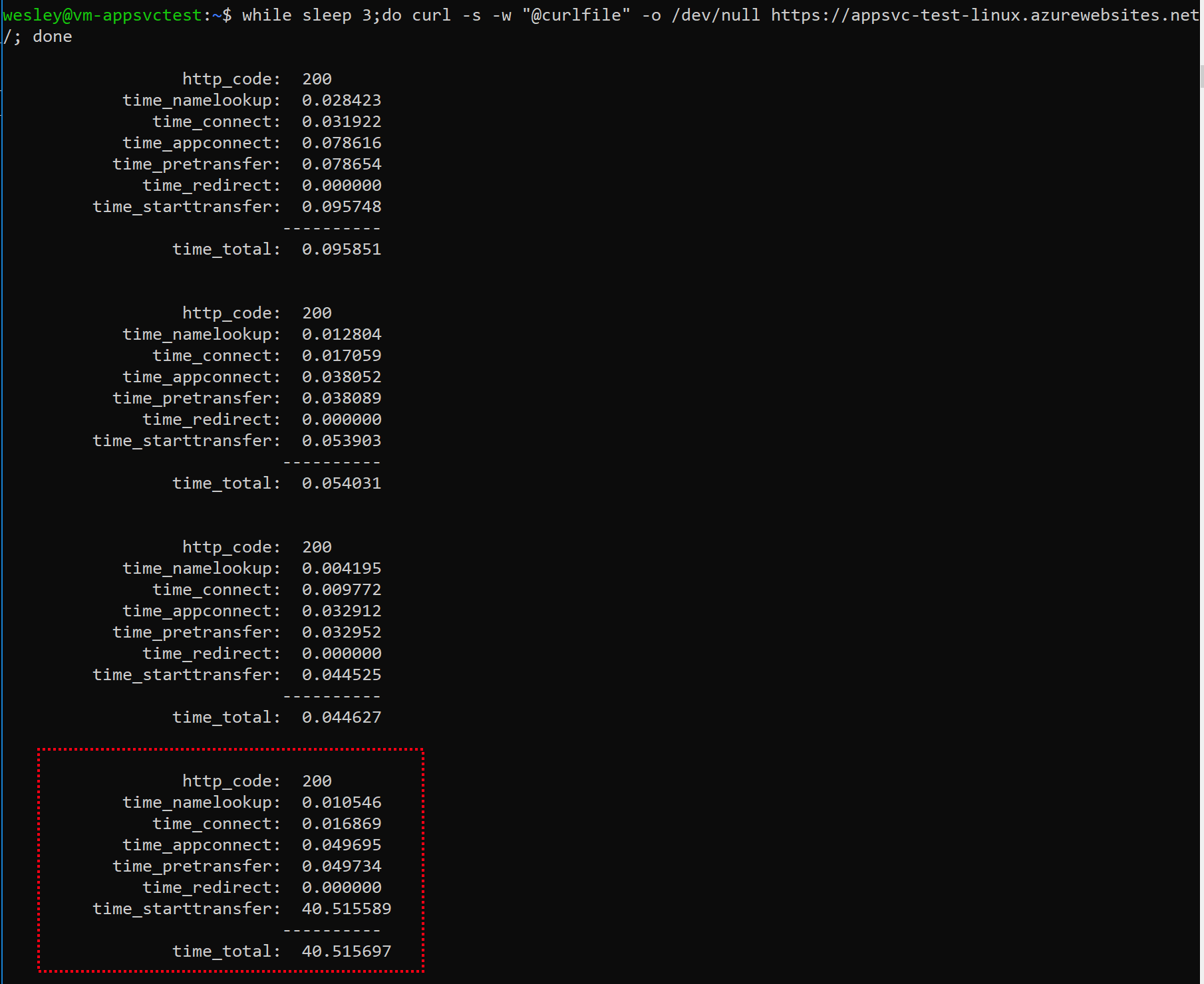

"while sleep 3;do curl -s -w "@curlfile" -o /dev/null https://appsvc-test-win.azurewebsites.net/; done"

Additionally, I used Kudu (Advanced Tools) and connected to the debug console (PowerShell or Bash, depending on the operating system).

Scaling up and down
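For readers who prefer a script over a shell one-liner, the polling loop can be sketched in Python using only the standard library. This is an approximation, not a replacement for curl: `probe` and `poll` are hypothetical names, the moment `urlopen()` returns (status line and headers read) stands in for curl's time_starttransfer, and unlike curl without `-L`, urllib follows redirects.

```python
import time
import urllib.error
import urllib.request


def probe(url, timeout=120.0):
    """Request url once; return (http_code, time_to_headers, time_total) in seconds."""
    start = time.perf_counter()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            headers_at = time.perf_counter()  # status line and headers received
            resp.read()  # drain the body, like curl -o /dev/null
            status = resp.status
    except urllib.error.HTTPError as err:
        # urllib raises 4xx/5xx responses as HTTPError; record the code instead.
        headers_at = time.perf_counter()
        status = err.code
    except OSError:
        # Connection failure or timeout (URLError is a subclass of OSError).
        headers_at = time.perf_counter()
        status = None
    total = time.perf_counter() - start
    return status, headers_at - start, total


def poll(url, interval=3.0, count=None):
    """Mirror `while sleep 3; do curl ...; done`: sleep, probe, print, repeat.

    count=None polls forever (stop with Ctrl+C); pass an int to stop earlier.
    """
    done = 0
    while count is None or done < count:
        time.sleep(interval)
        status, ttfb, total = probe(url)
        print(f"{time.strftime('%H:%M:%S')} http_code={status} "
              f"starttransfer~{ttfb:.3f}s total={total:.3f}s")
        done += 1
```

Usage: `poll("https://appsvc-test-win.azurewebsites.net/")`, run from the same test VM.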

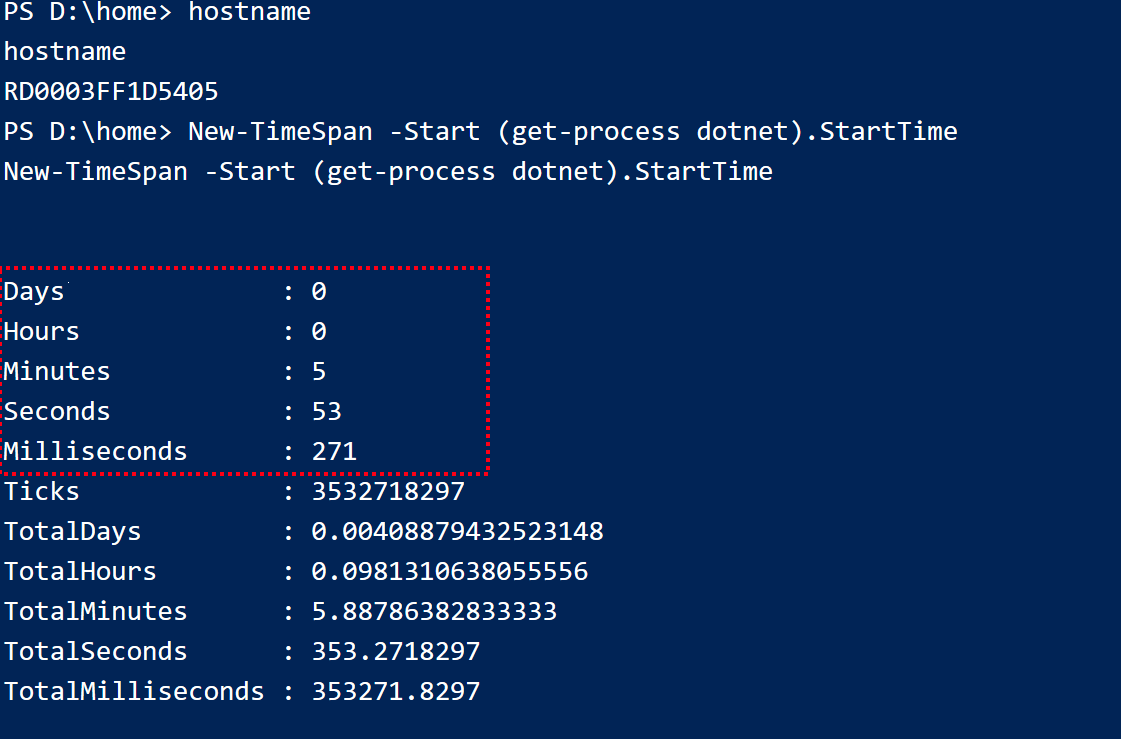

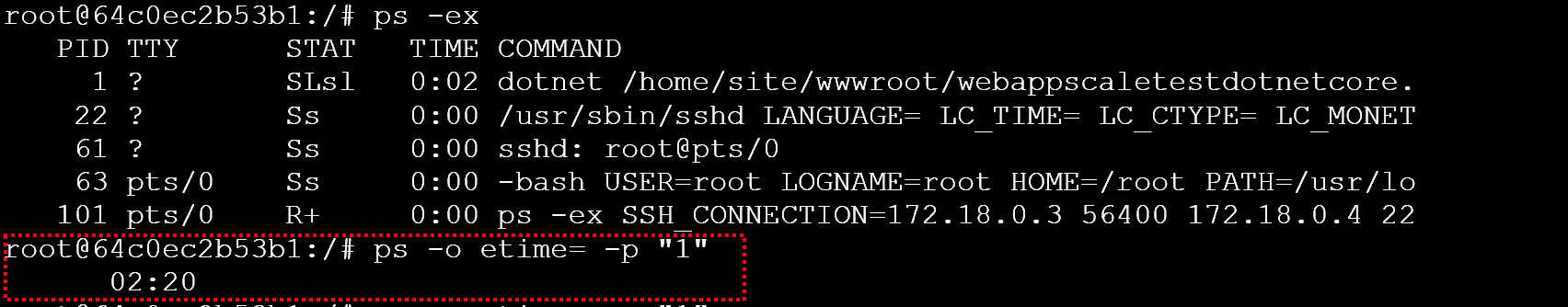

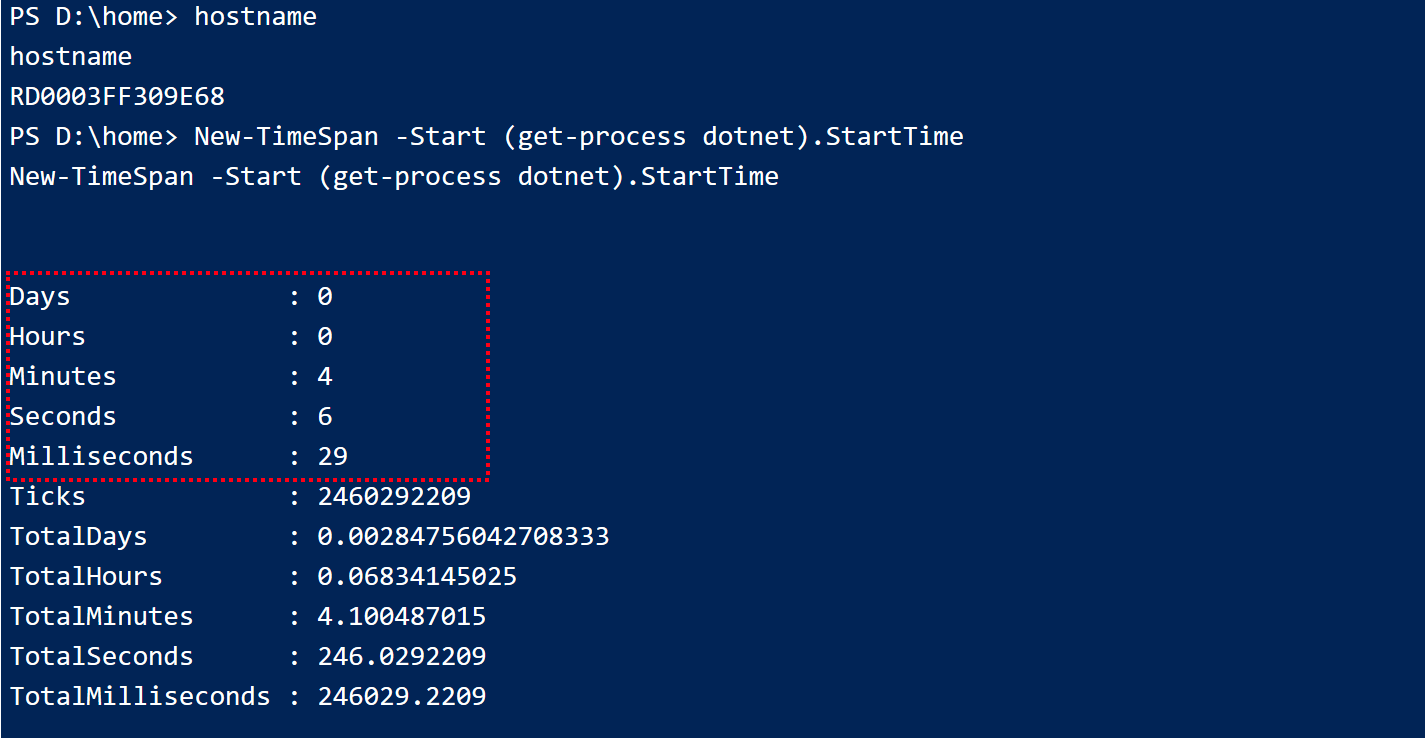

First, we scale our app from the Standard 1 (S1) tier to the Standard 2 (S2) tier, which is what we call "scaling up". Before scaling, I checked how long the process serving the website (dotnet in this case) had been running, so we can compare the results after scaling:

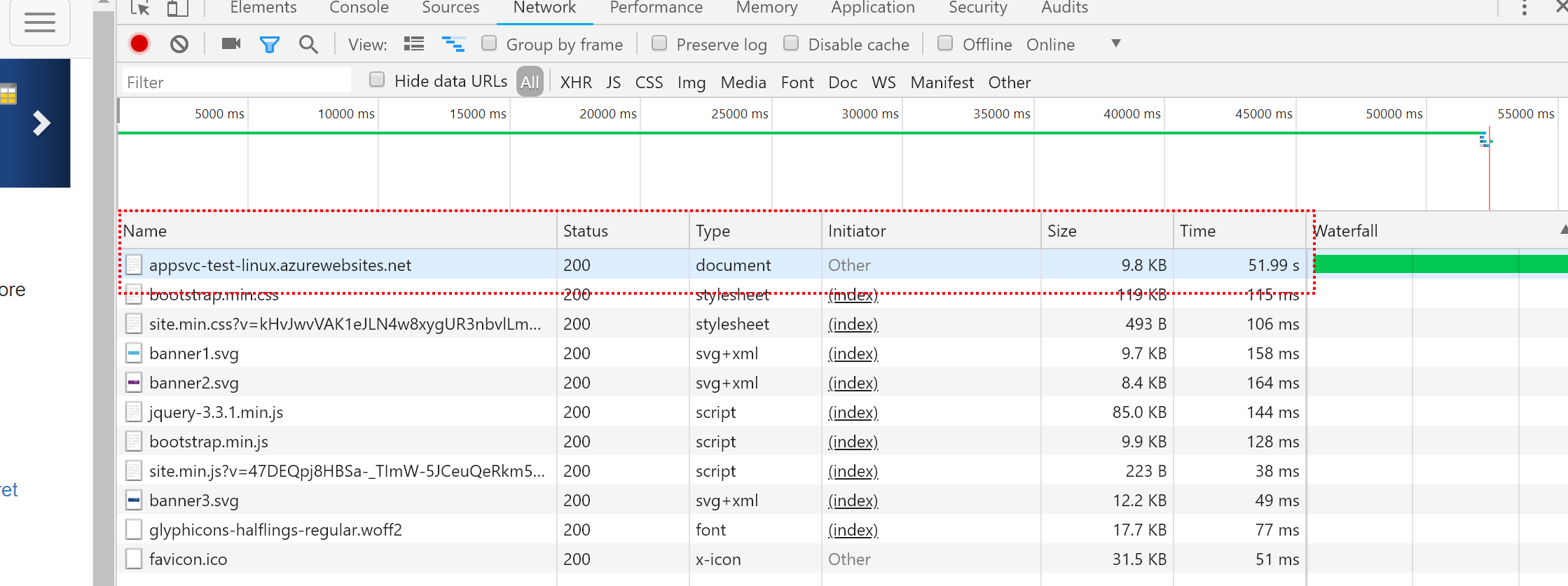

Before pressing the magic scaling button, I started curl to request the page every 3 seconds. As we can see, response times change during the vertical scaling operation. The results below show the typical behavior (the delay ranged from 5 seconds to just under a minute). The time of day and the load on the application may influence the results as well; I experienced a consistent 5-7 second delay when performing the scaling actions at 6am (GMT+1).

What this shows is that the response is delayed by approximately 30-40 seconds. It doesn't really matter whether you are running a Windows or Linux based WebApp: regardless of the OS, the response is delayed while the app / instance warms up, as time_starttransfer suggests. But there is still no downtime and still an HTTP 200.
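To put a number on that delay, you can compare two of the curl timers. curl's timers are cumulative from the start of the request, so time_starttransfer minus time_pretransfer approximates how long the request waited for the first byte, which is where warm-up shows up. A hedged Python sketch: each sample is assumed to be a dict keyed by the curlfile field names, and the `summarize` name and the 1 second threshold are arbitrary choices for illustration.

```python
def summarize(samples, interval=3.0, slow_threshold=1.0):
    """Summarize curl samples taken in a `while sleep <interval>` loop.

    Each sample is a dict with at least http_code, time_pretransfer,
    time_starttransfer and time_total (seconds, as printed by curl -w).
    """
    # Cumulative timers: this difference approximates the wait for the first byte.
    waits = [s["time_starttransfer"] - s["time_pretransfer"] for s in samples]
    slow = [i for i, w in enumerate(waits) if w > slow_threshold]

    span = 0.0
    if slow:
        first, last = slow[0], slow[-1]
        # Consecutive samples start time_total + interval apart (the loop sleeps
        # between requests); add those gaps, then the last slow request itself.
        span = sum(s["time_total"] + interval for s in samples[first:last])
        span += samples[last]["time_total"]

    return {
        "all_http_200": all(s["http_code"] == 200 for s in samples),
        "max_wait": max(waits, default=0.0),
        "slow_requests": len(slow),
        "degraded_span": span,  # seconds from the first to the last slow response
    }
```

A run where `all_http_200` stays true while `max_wait` jumps is exactly the "slow but not down" pattern described above.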

Manually browsing to the URL during a vertical scale action confirms this as it takes considerable time to load the application. But the page is still being served and the user is not presented with a random error (which I see happening in traditional environments far too often).

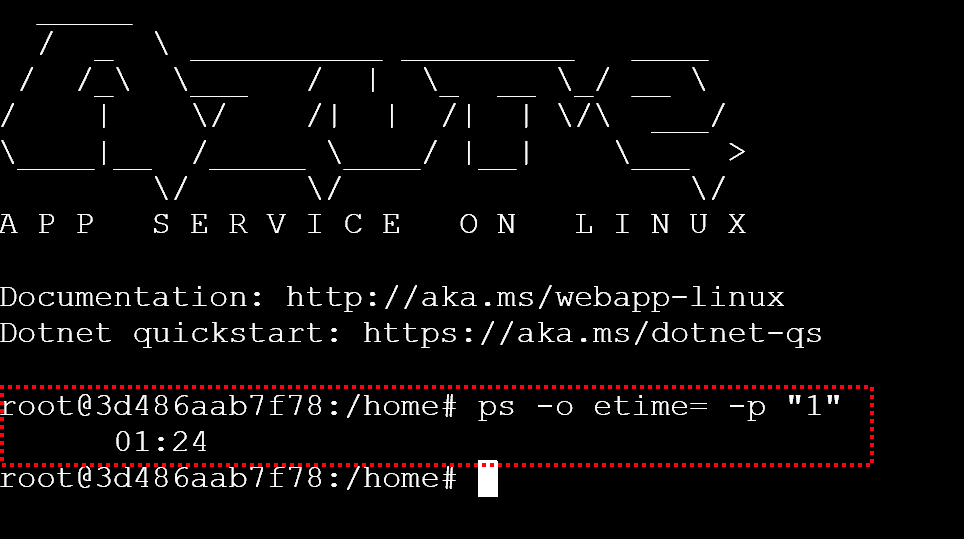

When reconnecting to the debug consoles for both operating systems, we can clearly see the differences:

- The hostname changed (new instance)

- The process has just started, which is why a "warm up" is required.

What we can tell from these results is that you will experience a delayed response for some seconds, as traffic is routed to a new instance of the requested size that still requires the initial warm-up.

Still, this is not something we can classify as "downtime" as the actual app service is still available, it just takes some time to respond and you initiated it :)

This test was repeated with the App Services running on two instances. When scaling up the behavior was identical.

Scaling in and out

When scaling horizontally, I ran the same tests, but with different results. Even though the processes still restarted, there was no noticeable increase in response times, and both websites (on Windows and Linux) were available instantly.
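One way to picture the difference (a simplified mental model and an assumption, not a description of App Service internals) is a front end that keeps serving from instances that are already warm while a new one starts. In the toy Python sketch below, `Balancer` and its methods are hypothetical: a newly added instance receives no traffic until it is marked ready. When scaling up, by contrast, the instance is replaced by a new one of the new size (note the changed hostname), so there is no warm instance left to fall back on and requests wait for the warm-up.

```python
from itertools import count


class Balancer:
    """Toy round-robin front end that only routes to instances marked ready."""

    def __init__(self):
        self.instances = {}  # instance name -> ready flag
        self._counter = count()

    def add(self, name, ready=False):
        self.instances[name] = ready

    def mark_ready(self, name):
        self.instances[name] = True

    def route(self):
        ready = sorted(name for name, ok in self.instances.items() if ok)
        if not ready:
            raise RuntimeError("no instance is ready to serve traffic")
        return ready[next(self._counter) % len(ready)]


lb = Balancer()
lb.add("A", ready=True)  # existing, warm instance
lb.add("B")              # instance added by scaling out, still warming up
print([lb.route() for _ in range(3)])  # ['A', 'A', 'A']
lb.mark_ready("B")
print([lb.route() for _ in range(2)])  # ['B', 'A']
```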

Considerations

What have we learned from this? Even though scaling up or down does have a (minimal) impact on the ability to connect to your app, we're not talking about actual "downtime" here.

So, from a business perspective, is scaling up and down something you do during the day? First of all, before you decide to scale up or down, make sure you have the telemetry to back your decision. Resource planning is not something to be taken lightly, as it greatly affects user experience and the financial picture of your solution. If you want to scale during the day, look into horizontal scaling.

What the tests tell us is that scaling up and down (moving to a different App Service Plan tier) has a temporary impact on the response time of your WebApp during the warm-up process. Even though it's only seconds, and nothing compared to scaling on-premises resources (which sometimes requires physical labor), it might be something you need to plan for, depending on how your app is used. Let's just say there is a reason why you cannot simply automate the vertical scaling of your Web App with the flick of a switch :)

Scaling out, on the other hand, can be done throughout the day (which is why there is an option to automatically scale in and out based on whatever metric you need).
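Autoscale rules combine a metric (CPU percentage, for example), a threshold, a time window, an instance count change and minimum/maximum instance limits. The Python function below is a toy version of one scale-out/scale-in rule pair, not Azure's implementation; the `desired_instances` name, the thresholds and the limits are hypothetical values. Keeping a gap between the two thresholds avoids flapping back and forth between instance counts.

```python
def desired_instances(current, avg_cpu, scale_out_at=70.0, scale_in_at=30.0,
                      minimum=1, maximum=3):
    """Toy rule pair: +1 instance when average CPU % is above scale_out_at,
    -1 when it is below scale_in_at, always clamped to [minimum, maximum]."""
    if avg_cpu > scale_out_at:
        target = current + 1
    elif avg_cpu < scale_in_at:
        target = current - 1
    else:
        target = current  # inside the dead band: leave the instance count alone
    return max(minimum, min(maximum, target))


print(desired_instances(1, 85.0))  # 2: busy, scale out
print(desired_instances(3, 95.0))  # 3: already at the maximum
print(desired_instances(2, 10.0))  # 1: quiet, scale in
```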