Accelerating AI Development and Observability with Prompty

When we build a new application on top of a service such as Azure OpenAI Service, we are not just writing code; we also spend a lot of time on prompt engineering. Every prompt we submit to the AI service needs careful crafting so that the result matches our expectations, and with generative AI and Large Language Models (LLMs) that can be quite challenging. Often we adjust a prompt slightly and submit it over and over again. When a solution depends on interaction with an LLM, its quality largely comes down to the prompt. That also means we need to understand what is going on, which becomes difficult as the code grows and the number of prompts increases. We need to be able to debug, adapt and improve without jumping through too many hoops. Luckily, there is a tool that can help: Prompty.

Prompty is built and open-sourced by Microsoft and is described as "A new asset class and format for LLM prompts that aims to provide observability, understandability, and portability for developers." Prompty comes in several forms. First, there is the tooling: the Visual Studio Code extension that gives us the observability and debugging capabilities we want when engineering prompts and integrating services such as Azure OpenAI Service into our solution. Second, there is the runtime, which supports popular frameworks such as LangChain and Semantic Kernel and tools such as Prompt Flow, allowing us to move prompts out of our code and manage them separately. Lastly, there is the definition: the format in which we "write" a Prompty asset, which is Markdown with a YAML front matter section.

Initial Configuration

Prompty is very easy to set up. In this example we will use Python and the Visual Studio Code extension. For this to work we need a couple of things:

- Azure OpenAI Service with a model deployed (GPT-4 in this case)

- Azure OpenAI Service endpoint for the language API

- Azure OpenAI Service key

- Visual Studio Code

- Prompty extension

- Code that sends a prompt

As a bonus, you may also want to install the Azure extension in Visual Studio Code and sign in. You can then use your credentials to authenticate with Azure OpenAI Service when you run Prompty.

However, as we are focusing on what Prompty can do and how it can help you, we will leave more complex authentication mechanisms aside for now and simply authenticate using a key.

Once Prompty is set up, a series of new options appears in Visual Studio Code. To verify that the configuration is correct, right-click in the file explorer (the left column in Visual Studio Code) and check whether the option "New Prompty" is available. If it is, let's get started and click it!

Practical Use

Prompty has many use cases, and as it is still a new solution, I expect new ones to appear rapidly. For this post we will focus on two things: observability and debugging, and moving prompt configuration out of our code.

Using Prompty

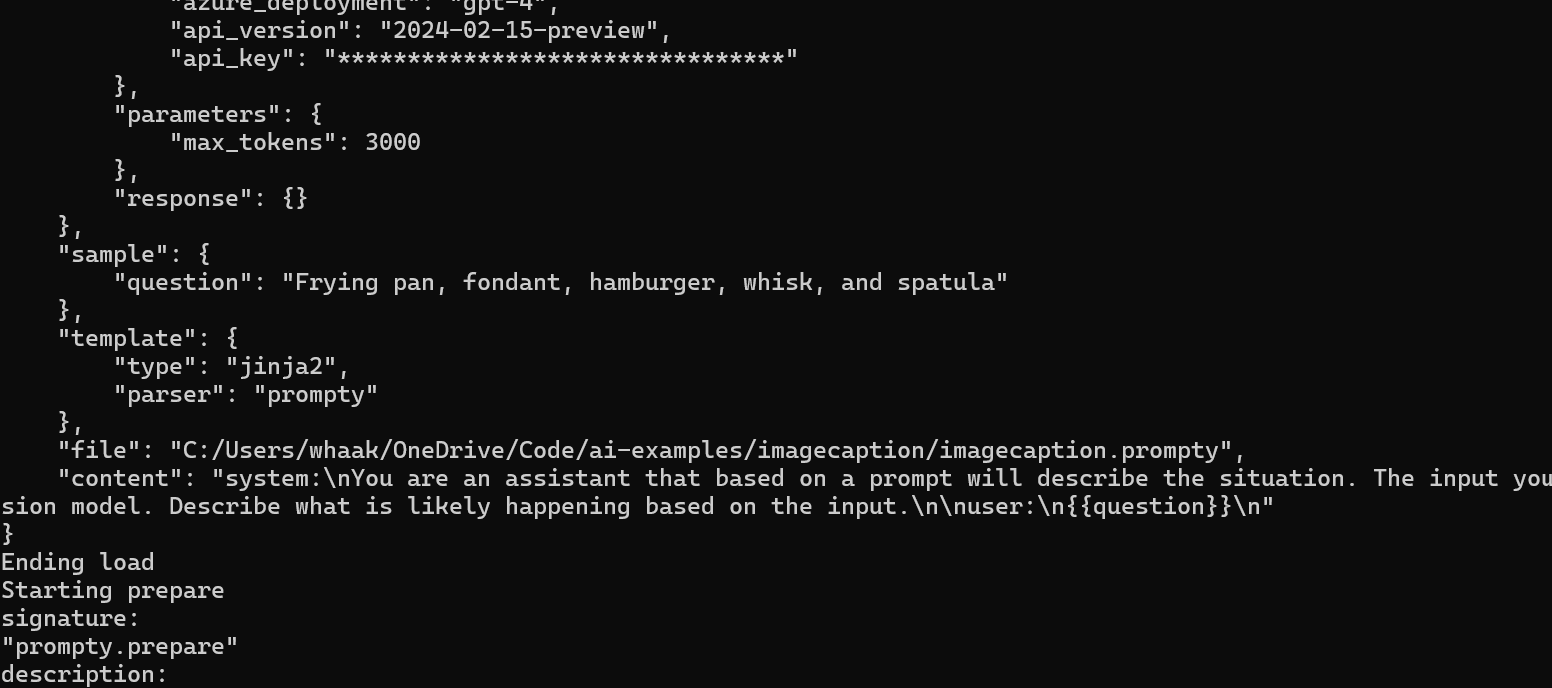

Once we have clicked "New Prompty", a new file with a ".prompty" extension appears in the file explorer. Inspecting the file shows that it is written in Markdown, with a YAML front matter section for the configuration, and that it offers a very structured way to write and send a prompt. It is definitely easier to read than prompts embedded in code. Adapted to our scenario, it looks like this:

```
---
name: SceneDescriptionPrompt
description: A prompt that sends a question and expects a description of an image in return
authors:
  - Wesley Haakman
model:
  api: chat
  configuration:
    type: azure_openai
    azure_endpoint: https://YourInstance.openai.azure.com
    azure_deployment: gpt-4
    api_version: 2024-02-15-preview
    api_key: "<KEY>"
  parameters:
    max_tokens: 3000
sample:
  question: Frying pan, fondant, hamburger, whisk, and spatula
---

system:
You are an assistant that based on a prompt will describe the situation. The input you receive are objects detected by a computer vision model. Describe what is likely happening based on the input.

user:
{{question}}
```
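To make the structure of this file concrete, here is a simplified, stdlib-only sketch of what conceptually happens when such a file is loaded: the YAML front matter between the `---` lines is separated from the template body, `{{question}}` is filled in, and the `system:` and `user:` sections become chat messages. The helpers `split_front_matter` and `parse_messages` are hypothetical; Prompty's own loader parses the YAML and renders the template with a real template engine (Jinja2 by default), so treat this as an illustration, not as its implementation.

```python
import re

# Trimmed copy of the .prompty file above (front matter shortened for brevity).
PROMPTY_TEXT = """---
name: SceneDescriptionPrompt
sample:
  question: Frying pan, fondant, hamburger, whisk, and spatula
---

system:
You are an assistant that based on a prompt will describe the situation.

user:
{{question}}
"""

# Front matter sits between the first two '---' lines; everything after is the template.
FRONT_MATTER = re.compile(r"\A---[ \t]*\n(.*?)\n---[ \t]*\n(.*)\Z", re.DOTALL)
# A line consisting only of a role name and a colon starts a new message.
ROLE_MARKER = re.compile(r"^(system|user|assistant):[ \t]*$", re.MULTILINE)
# {{ name }} placeholders, filled from the inputs dictionary.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def split_front_matter(text: str) -> tuple[str, str]:
    """Return (front_matter, body); the YAML itself is left unparsed here."""
    match = FRONT_MATTER.match(text)
    if match is None:
        raise ValueError("no '---' delimited front matter found")
    return match.group(1).strip(), match.group(2).strip()


def parse_messages(body: str, inputs: dict[str, object]) -> list[dict[str, str]]:
    """Fill placeholders, then turn the role sections into chat messages."""
    rendered = PLACEHOLDER.sub(lambda m: str(inputs[m.group(1)]), body)
    # re.split with a capturing group returns [prefix, role, content, role, content, ...]
    parts = ROLE_MARKER.split(rendered)
    return [
        {"role": role, "content": content.strip()}
        for role, content in zip(parts[1::2], parts[2::2])
    ]


if __name__ == "__main__":
    front_matter, body = split_front_matter(PROMPTY_TEXT)
    sample = {"question": "Frying pan, fondant, hamburger, whisk, and spatula"}
    for message in parse_messages(body, sample):
        print(f"{message['role']}: {message['content']}")
```

Keeping configuration and template in one file is what lets the extension run the prompt directly with the `sample` values.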

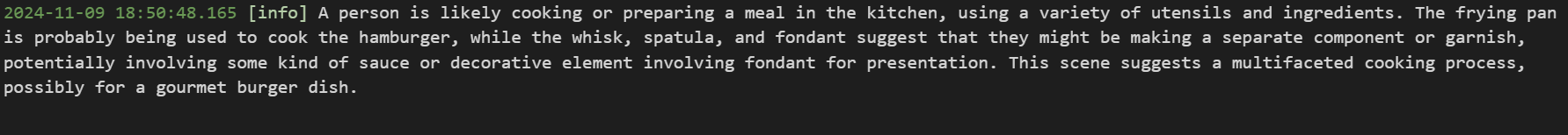

From Visual Studio Code we can now run the prompt by pressing the "play" button in the top right or by pressing F5. Once the run succeeds, we are presented with an info message containing the result.

This executed the prompt with the contents of our sample question. Great for testing but what we need is to use the contents of the Prompty file when we run a prompt from code. As we are using Python, the first thing we need to do is install the Prompty packages for Azure. We can do so by running the following command:

```shell
pip install "prompty[azure]"
```

We can then import the Prompty libraries into our code and configure tracing for later use:

```python
import prompty
import prompty.azure  # makes the Azure OpenAI invoker available
from prompty.tracer import trace, Tracer, console_tracer, PromptyTracer

# Print trace output to the console while the script runs
Tracer.add("console", console_tracer)

# Write .tracy files to the .runs directory for the Visual Studio Code extension
json_tracer = PromptyTracer()
Tracer.add("PromptyTracer", json_tracer.tracer)
```

Adding a tracer for the console ensures we get feedback in the console when we execute our Python code. The PromptyTracer generates .tracy files in a .runs directory of your project. These files can be interpreted by the Prompty extension in Visual Studio Code.
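Prompty's `@trace` decorator and tracers take care of this for us. To illustrate the idea, here is a stdlib-only sketch of what a tracing decorator does conceptually: wrap a function, time it, record its inputs and output, print a line to the console and write one JSON file per call. `trace_to_dir` is hypothetical; it is not Prompty's implementation, and the JSON it writes is not the `.tracy` format.

```python
import functools
import json
import tempfile
import time
import uuid
from pathlib import Path


def trace_to_dir(runs_dir: Path):
    """Hypothetical decorator: log each call to the console and to a JSON file."""

    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            # Exceptions propagate without being recorded, to keep the sketch short
            result = func(*args, **kwargs)
            duration_ms = round((time.perf_counter() - start) * 1000, 3)
            record = {
                "name": func.__name__,
                "args": [repr(a) for a in args],
                "kwargs": {key: repr(value) for key, value in kwargs.items()},
                "result": repr(result),
                "duration_ms": duration_ms,
            }
            # Console feedback, comparable in spirit to console_tracer
            print(f"[trace] {func.__name__} finished in {duration_ms} ms")
            # One JSON file per call, comparable in spirit to the .tracy files in .runs
            runs_dir.mkdir(parents=True, exist_ok=True)
            path = runs_dir / f"{func.__name__}.{uuid.uuid4().hex}.json"
            path.write_text(json.dumps(record, indent=2), encoding="utf-8")
            return result

        return wrapper

    return decorator


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:

        @trace_to_dir(Path(tmp))
        def describe(question: str) -> str:
            return f"Someone is probably baking with: {question}"

        describe("frying pan, whisk")
        print(sorted(p.name for p in Path(tmp).iterdir()))
```

Because the decorator only wraps the function, the function body stays unchanged, which is also why adding `@trace` to our own code is such a small change.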

Before we continue, a little context on my example. I have written Python code that does the following (inspired by a Saturday morning of cake baking):

- Sends a picture to the Azure AI Vision (Computer Vision) Image Analysis endpoint with the Dense Captions feature. This returns a JSON payload with descriptions of the objects detected in the image, such as: 'a tray of baking items and a box of cake', 'a cake pan with a metal object inside', 'a container of candy on a table', 'a bag of frosting on a table', 'a stack of bread on a napkin', 'a box of food on a table', 'a can of food on a table', 'a bottle with a white cap', 'a group of items on a counter', 'a small bottle of liquid next to a package of cream'.

- Creates a new prompt and sends it to the Azure OpenAI Service language endpoint with a GPT-4 deployment. The captions from the previous step become the user message, combined with a system prompt: "You are an assistant that based on a prompt will describe the situation. The input you receive are objects detected by a computer vision model. Describe what is likely happening based on the input."

- The result is: "Scene Description: It appears that someone is preparing to bake or decorate a cake. .... This setup indicates a baking or cooking session in progress with a focus on preparing a sweet treat."
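The first step hands a list of caption strings to the second. Here is a minimal sketch of pulling those strings out of the dense captions response, assuming the Image Analysis 4.0 REST response shape (`denseCaptionsResult` containing `values`, each with `text` and `confidence`); verify this against the API version you call. The helper `extract_captions` and its `min_confidence` filter are hypothetical, and the sample payload reuses captions from above with illustrative, made-up confidence scores.

```python
import json

# Trimmed sample response; the captions come from the example above, the
# confidence scores are illustrative placeholders, not real model output.
SAMPLE_RESPONSE = json.loads(
    """
    {
      "denseCaptionsResult": {
        "values": [
          {"text": "a tray of baking items and a box of cake", "confidence": 0.8},
          {"text": "a cake pan with a metal object inside", "confidence": 0.7},
          {"text": "a bottle with a white cap", "confidence": 0.4}
        ]
      }
    }
    """
)


def extract_captions(response: dict, min_confidence: float = 0.0) -> list[str]:
    """Return caption texts from a dense captions response, optionally filtered."""
    # Assumed shape: {"denseCaptionsResult": {"values": [{"text": ..., "confidence": ...}]}}
    values = response.get("denseCaptionsResult", {}).get("values", [])
    return [
        value["text"]
        for value in values
        if value.get("confidence", 1.0) >= min_confidence
    ]


if __name__ == "__main__":
    print(extract_captions(SAMPLE_RESPONSE, min_confidence=0.5))
```

Filtering low-confidence captions before they reach the prompt is one way to keep noise out of the model's input.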

This works fine, but if we look at the code, the prompt is managed inside our Python script, in a function like the one below:

```python
import requests


# A method on our assistant class; self provides endpoint and api_key
def generate_scene_description(self, captions):
    headers = {
        "Content-Type": "application/json",
        "api-key": self.api_key,
    }

    # Format captions as user messages
    user_content = [{"type": "text", "text": caption} for caption in captions]

    # Payload for the assistant's prompt
    payload = {
        "messages": [
            {
                "role": "system",
                "content": [
                    {
                        "type": "text",
                        "text": "You are an assistant that based on a prompt will describe the situation. "
                        "The input you receive are objects detected by a computer vision model. "
                        "Describe what is likely happening based on the input.",
                    }
                ],
            },
            {"role": "user", "content": user_content},
            {"role": "assistant", "content": [{"type": "text", "text": ""}]},
        ],
        "temperature": 0.7,
        "top_p": 0.95,
        "max_tokens": 800,
    }

    # Send request to the API
    try:
        response = requests.post(self.endpoint, headers=headers, json=payload)
        response.raise_for_status()
        response_json = response.json()
        assistant_message = response_json["choices"][0]["message"]["content"]
        return assistant_message
    except requests.RequestException as e:
        print(f"Failed to make the request. Error: {e}")
        return None
```

Every time we want to improve our prompt, we need to scroll up and down, modify our code and try again. The more prompts we use, the more lines of code consist of nothing but prompt text, and the noisier the code becomes. Prompty allows us to move that out of the code. Earlier we created the .prompty file that contains the Azure OpenAI Service configuration, the input variables (our question) and a system prompt. We can now swap out the big chunk of code above for the following:

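```python
from typing import Any  # lowercase `any` is a builtin function, not a type

# prompty and trace are imported as shown earlier


@trace
def run(question: Any) -> str:
    # Load imagecaption.prompty, fill in {{question}} and call the configured model
    result = prompty.execute(
        "imagecaption.prompty",
        inputs={"question": question},
    )
    return result
```

That is way less code!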
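Note that `run` receives the list of captions as `question`. With the default Jinja2 templating, a Python list passed to `{{question}}` typically renders as its `str()` representation (brackets and quotes included), whereas the `sample` question in the .prompty file is a plain comma-separated line. If you want the rendered prompt to resemble the sample, a hypothetical helper such as `captions_to_question` can normalize the input first; this is an optional design choice, not something the original code does.

```python
def captions_to_question(captions: list[str]) -> str:
    """Join non-empty captions into one readable, comma-separated line."""
    cleaned = [caption.strip() for caption in captions if caption and caption.strip()]
    if len(cleaned) <= 1:
        return "".join(cleaned)  # "" for no captions, the caption itself for one
    if len(cleaned) == 2:
        return " and ".join(cleaned)
    # Three or more: "a, b, and c", matching the style of the sample question
    return ", ".join(cleaned[:-1]) + ", and " + cleaned[-1]


if __name__ == "__main__":
    captions = [
        "a tray of baking items and a box of cake",
        "a bag of frosting on a table",
        "a bottle with a white cap",
    ]
    # In the script this would be passed on as: run(captions_to_question(captions))
    print(captions_to_question(captions))
```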

This is what happens when the script's main block runs:

- denseCaption runs and stores the results in "captions"

- SceneDescriptionAssistant.run is triggered with the captions from the previous step as a parameter

- Results are printed

```python
if __name__ == "__main__":
    denseCaption = DenseCaption()
    captions = denseCaption.generate_dense_caption()
    if captions:
        results = SceneDescriptionAssistant.run(captions)
        if results:
            print("Scene Description:", results)
```

We still have all the prompt text, just in a separate file where it is easier to manage, and the readability of our code has improved significantly.

Observability

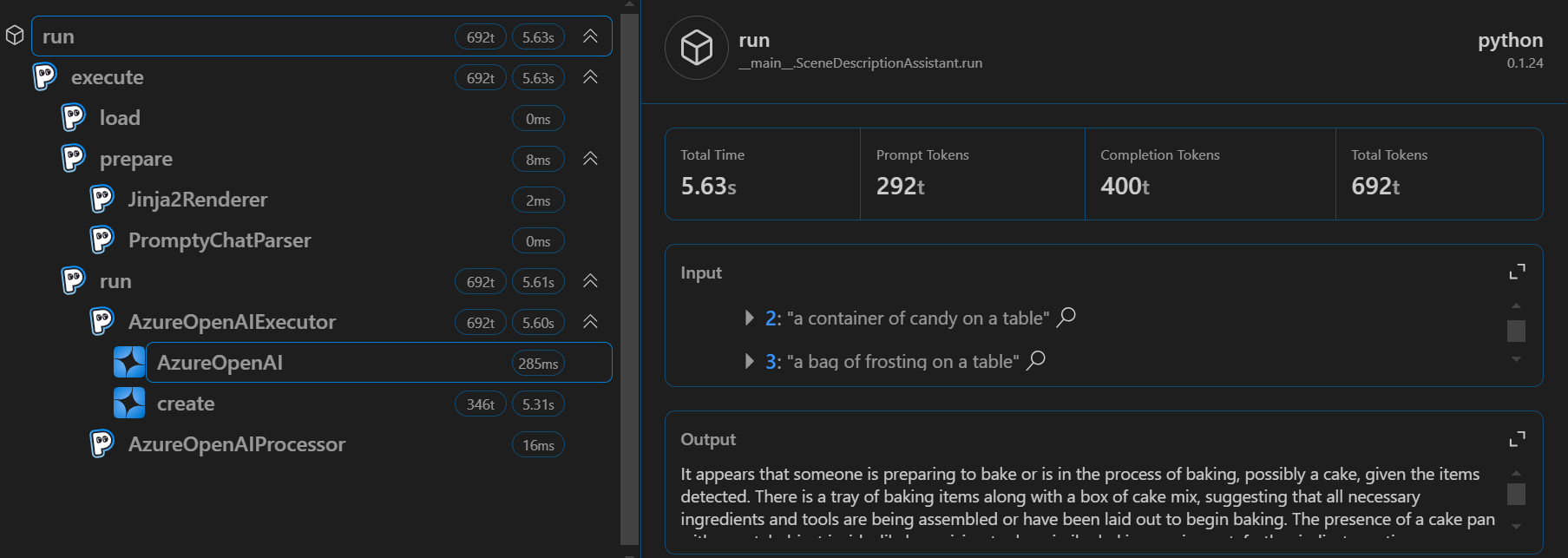

What about observability? We promised Prompty improves that too, and it does. In the previous steps we configured our tracers and decorated our function with @trace, so when we run our code we see trace output in the console.

Additionally, .tracy files are created in the .runs folder of our project. The Prompty extension in Visual Studio Code interprets these files and presents them in its UI, which provides great observability.
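The extension is the main way to explore these traces, but a small script can also list them, for example when working outside the editor. This sketch assumes only that `.tracy` files are JSON documents in the `.runs` folder (which is how we understand the PromptyTracer writes them); `summarize_traces` is hypothetical and deliberately reports only file names and top-level keys, so it does not depend on the trace schema.

```python
import json
from pathlib import Path


def summarize_traces(runs_dir: Path, limit: int = 5) -> list[tuple[str, list[str]]]:
    """Return (file name, top-level JSON keys) for the newest .tracy files."""
    if not runs_dir.is_dir():
        return []
    # Newest first, based on file modification time
    files = sorted(runs_dir.glob("*.tracy"), key=lambda p: p.stat().st_mtime, reverse=True)
    summary = []
    for path in files[:limit]:
        data = json.loads(path.read_text(encoding="utf-8"))
        # Only the top-level keys are reported, so no particular schema is assumed
        keys = sorted(data) if isinstance(data, dict) else []
        summary.append((path.name, keys))
    return summary


if __name__ == "__main__":
    for name, keys in summarize_traces(Path(".runs")):
        print(name, keys)
```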

When writing code that integrates with AI technologies, insights such as those shown in the image above are essential. They help developers understand what is going on behind the scenes and shorten the feedback loop significantly.

Wrapping up

Prompty is an extension, runtime and tool that helps us accelerate AI development: we can write cleaner code, move prompts out of it, and store and manage them in a readable format (Markdown). Its observability features let us generate traces that can be read in the console or interpreted by the Visual Studio Code extension and presented in its UI, a valuable asset in our feedback loop.

Prompty can do much more than this and supports popular frameworks such as Prompt Flow, LangChain and Semantic Kernel. This post merely shows how powerful even a simple Prompty implementation can be. As Prompty is still relatively new, I am looking forward to the developments to come!